Conceptualizing and launching a new flagship product feature in Sourcegraph

Organization

Sourcegraph

Role

Product Design Lead, Pseudo-Product Manager

Date

2020 – 2021

Sourcegraph is a universal code search and intelligence platform. More than 800,000 developers in some of the largest companies around the world rely on Sourcegraph to search across and navigate all of their company’s code on all of their code hosts.

From 2020 to 2021, I worked with Sourcegraph’s “Search Product” team to explore opportunities to increase the value of Sourcegraph to developers by building on the existing Search product’s capabilities. We had a powerful product, but at a high level, it offered few “push” moments that would draw developers in during their daily workflows; instead, we relied on developers remembering to reach for Sourcegraph.

We had identified one existing feature area that already delivered some form of “push” moment: “saved searches & saved search notifications.” We knew that a couple of our Enterprise customers were using this feature, but adoption was low across the board and we didn’t know much about why these customers were using it. In fact, we weren’t certain how the feature had come about in the first place.

We recognized this as an open-ended problem space. As a designer, I kicked off and carried out a project which ultimately led to proposing, designing, and launching a new flagship product feature in Sourcegraph: Code Monitoring.

Discovery

To better understand the problem space we were working in, I planned a lean research effort to learn about the existing saved searches & saved search notifications feature, how it was being used, and the problems and opportunities surrounding it.

For context: with saved searches, developers could save a given search query for future use rather than having to remember a long query involving many filters and intricate pattern matching. Saved search notifications had been tacked on top of these saved searches: at some point in the past, a customer had requested a way to receive a notification email whenever a saved query returned a different set of results than it had when it was originally saved, and again each time those results changed afterward.

Together with members of the engineering team and one of our product managers, I led the team in reviewing existing user and customer feedback, examining existing usage analytics data, and identifying folks to reach out to for user interviews within a few of our existing Enterprise customers.

I guided the team in capturing and synthesizing insights from these discovery activities. A picture soon became clear: “saved searches” and “saved search notifications” were two separate concepts that had become tangled together, creating a series of conceptual and usability problems. And yet, we could see a big opportunity for “saved search notifications” to provide value to our customers while creating a strong “push” moment into the product.

Definition

Sourcegraph’s globally distributed remote team calls for thoughtful, async-first communication and collaboration. Throughout the discovery and definition phases of this effort, I used artifacts like Google Docs, Excalidraw, and Figma as the basis for collaboration.

I created a living RFC for this effort, where we defined a set of problem statements and top problems that served as the basis for design exploration.

A couple of examples of the problem statements we defined include:

- We’ve observed that the “saved search” notification emails Sourcegraph sends today create an extra alerting system for users to consume that doesn’t provide the benefits already present in their existing alerting systems, which creates more “noise” without contributing to existing UI metrics or creating positive social pressures. If we expand the types of notification channels available to users to align with the channels they’re already using, it will make “saved search” notifications more valuable and usable for our users.

- We’ve observed that the functionality, purpose, and value of “saved searches” in relation to notifications isn’t clear or intuitive in the product, which makes it less likely our users will explore and adopt the feature. If we improve this, we’ll make it easier for users to understand the purpose and benefits of code monitoring and actions/notifications.

Some of the top problems include:

- The way “saved searches” are implemented today is not intuitive and blurs the purpose of the feature by combining the separate concepts of “saved search for easy re-use” and “code monitoring and notifications,” which makes it less obviously useful for users.

- Notifications can’t be consumed on Sourcegraph itself, which makes notifications hard to monitor and rely upon.

- The search types available for “saved search” queries that can result in notifications are limited to diffs and commits, but this isn’t clearly communicated, which makes the workflow for defining “saved search notifications” confusing.

Once we felt we had a good understanding of these problems and opportunities, we moved into low-fidelity design exploration.

Design

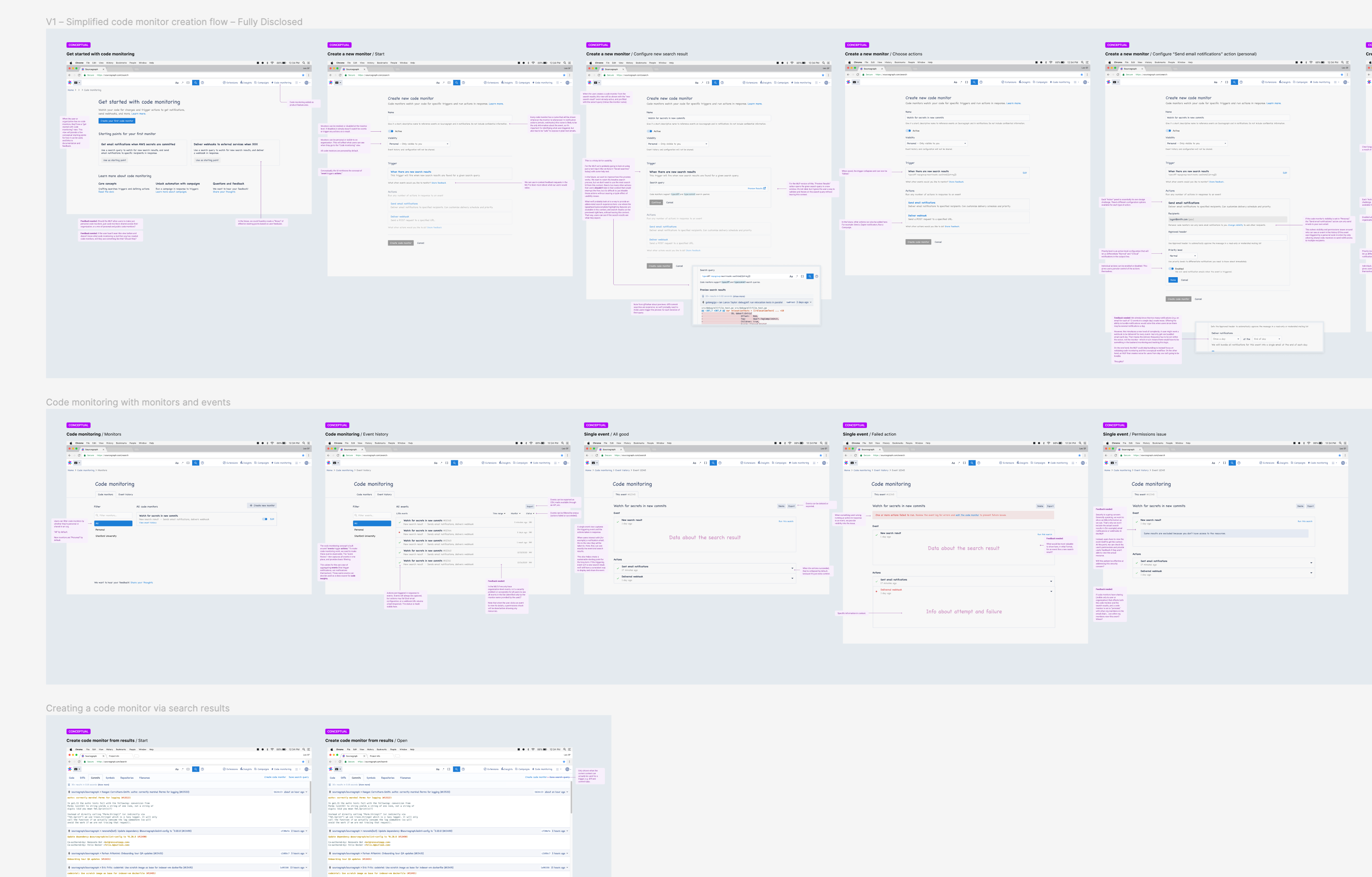

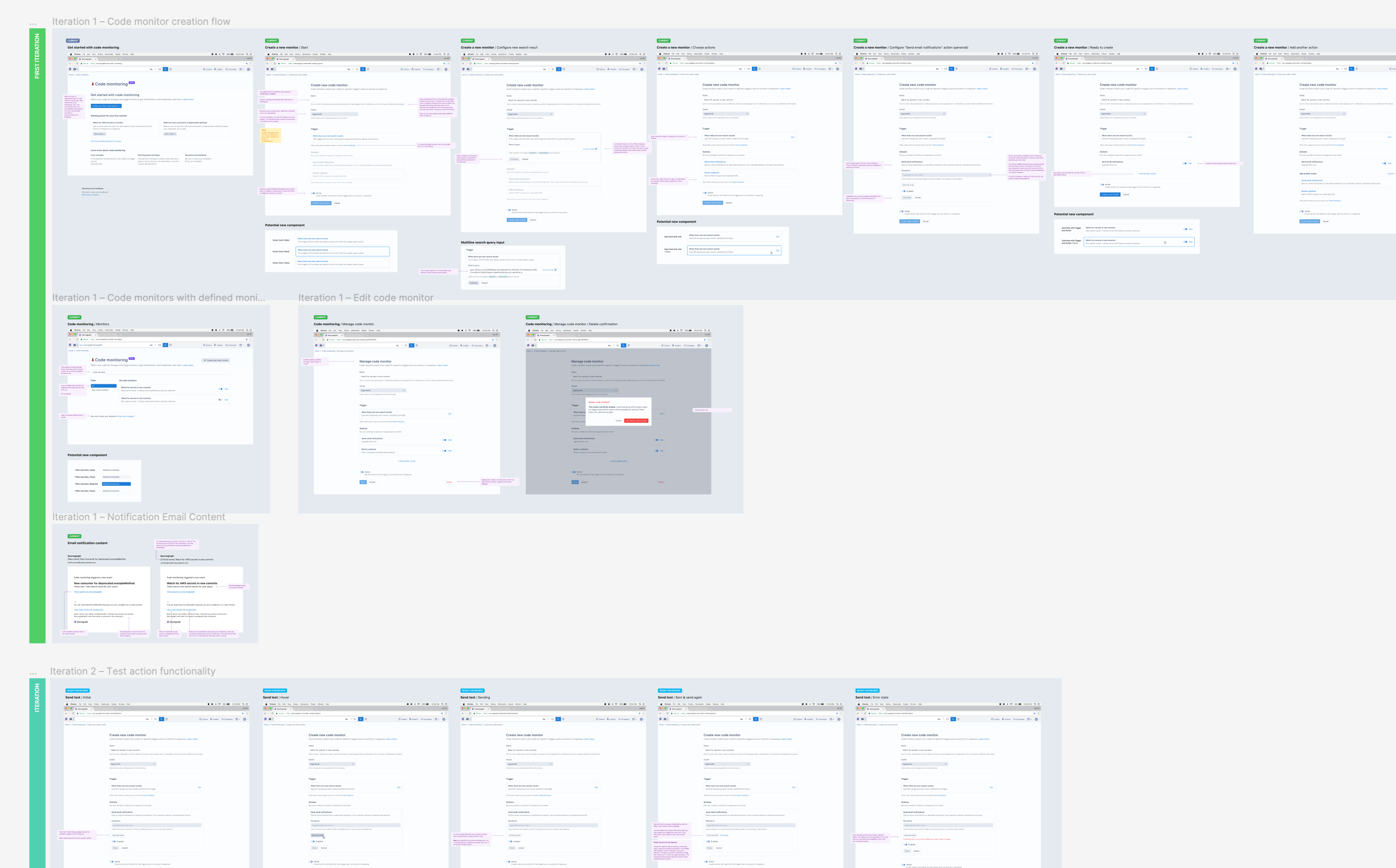

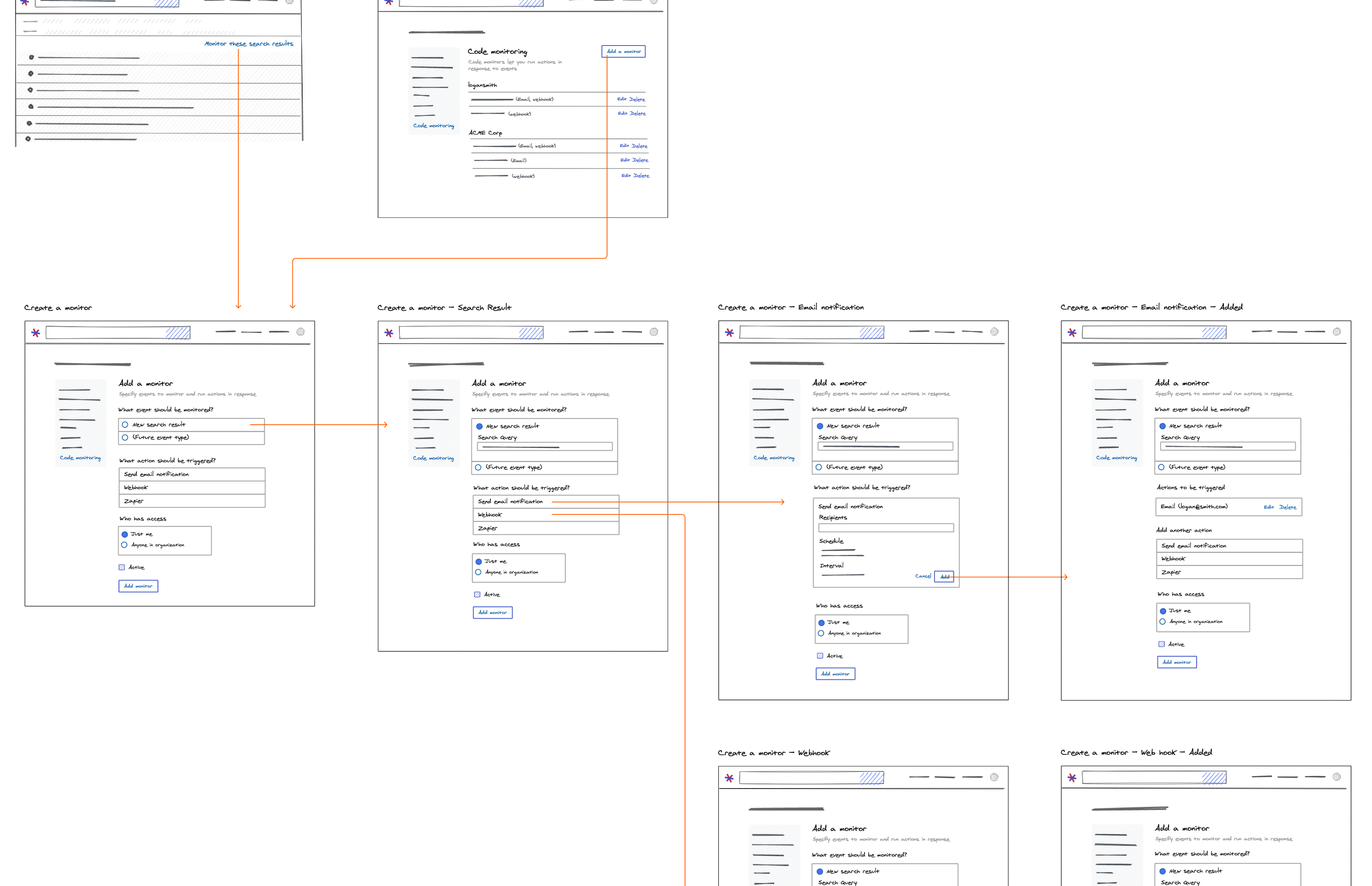

I oriented design exploration and collaboration around lower-fidelity artifacts like Excalidraw whiteboards and Figma wireframes. My priority at this stage was to engage the engineering team around the problem space and user workflows, rather than the specific visual details of the user interface. In Sourcegraph’s remote, async-first environment, it was all the more important to use these artifacts to capture our thinking and assumptions and to guide discussion.

One of the things I love about working in the lowest possible fidelity when in an open-ended problem space is how easily we can explore and blend together many different things—user flows, information architecture, concepts and mental models, and loose experimental interfaces.

Before long, we found ourselves converging on an approach that felt like an effective and sustainable solution to the problem space: code monitoring would become a separate product feature area, helping developers keep track of and get notified about changes in their code. Existing use cases we could address in the earliest iteration included getting notified about potential secrets, anti-patterns, or common typos committed to a user’s codebase.

Conceptually, a code monitor would be a single entity containing one trigger, defined by a search query, and any number of actions carried out in response.
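To make this conceptual model concrete, here is a minimal TypeScript sketch of a code monitor as exactly one trigger plus a list of actions. The type names, fields, and the `plannedActions` helper are hypothetical illustrations, not Sourcegraph’s actual schema; the email action reflects the original saved search notifications, while the webhook and Slack actions stand in for channels that arrived later on the roadmap.

```typescript
// Hypothetical model of a code monitor: one trigger, any number of actions.
// Names and fields are illustrative assumptions, not Sourcegraph's schema.
interface Trigger {
  query: string; // search query whose new results fire the monitor
}

type Action =
  | { kind: "email"; recipients: string[] }
  | { kind: "webhook"; url: string }
  | { kind: "slack"; channel: string };

interface CodeMonitor {
  description: string;
  enabled: boolean;
  trigger: Trigger; // exactly one trigger per monitor
  actions: Action[]; // zero or more actions, run in response
}

// Describe which actions would run when the trigger produces new results.
// A disabled monitor, or a run with no new results, triggers nothing.
function plannedActions(monitor: CodeMonitor, newResults: string[]): string[] {
  if (!monitor.enabled || newResults.length === 0) return [];
  return monitor.actions.map((action): string => {
    switch (action.kind) {
      case "email":
        return `email ${action.recipients.join(", ")}`;
      case "webhook":
        return `POST ${action.url}`;
      case "slack":
        return `message ${action.channel}`;
    }
  });
}
```

Keeping the trigger as a single field and the actions as an array mirrors the “one trigger, many actions” model, so adding a new notification channel means adding a variant to `Action` rather than changing how monitors are defined.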

With this underlying concept in place, I explored and prototyped the initial user interface, both to work out how the first iteration might be implemented and to make sure the approach was sustainable as a system and presented a vision of the future. We took this prototype into testing with the people we’d contacted during the discovery phase. Our goals were to validate that this solution effectively solved the problem they were using saved search notifications for today, to check the conceptual model, and to look for gaps or opportunities for further iterations.

Based on the outcomes of the testing and the team’s feedback and contributions, I then created a high-fidelity design artifact for the first iteration that captured all behaviours, logic, and assumptions.

Delivery

One of the challenges of working on a self-hosted product is the iteration cycle: a new version was released each month, and new features or experiments only became available once a customer upgraded their instance to that version of Sourcegraph. As a result, we often couldn’t get feedback on our ideas and efforts until several months after something shipped.

Thankfully, we had a couple of customers who were hankering for code monitoring, especially after getting a sneak preview during user testing. The engineering team built the first version of Code Monitoring behind a feature flag, which let us ship it in the next release and immediately start getting feedback.

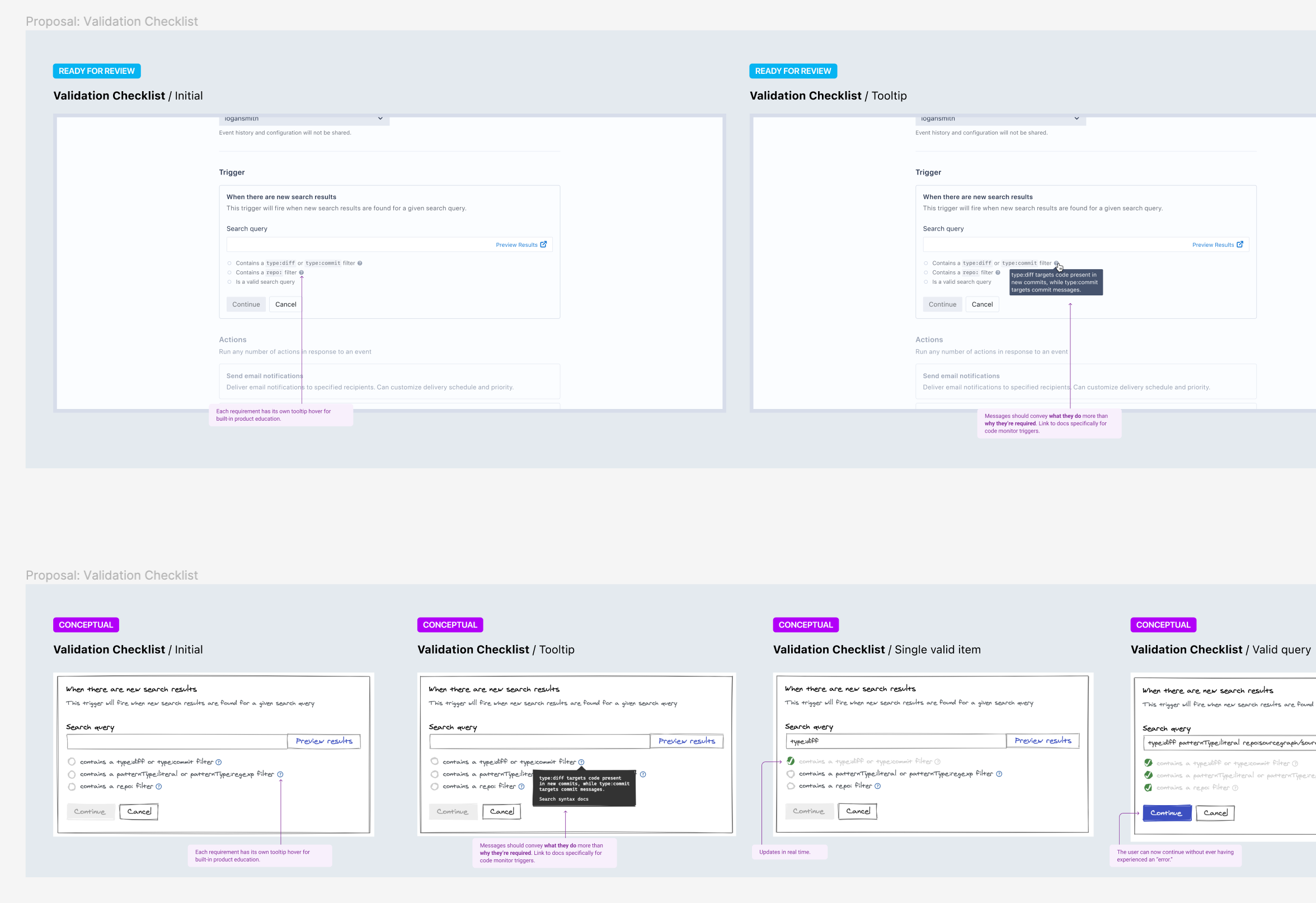

We quickly learned from these customers that we had a usability issue around crafting valid search queries for triggers. Unlike everyday code searches, the queries used in code monitors had a specific set of constraints, and it was easy to make mistakes. This led to a quick follow-up project in which I adapted the familiar “password criteria” pattern: a checklist that validates the query against several independent constraints and shows which ones are met.
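The “password criteria” approach can be sketched as a list of independent checks, each evaluated separately so the user sees every unmet constraint at once instead of a single error. This is a minimal TypeScript sketch under stated assumptions, not Sourcegraph’s implementation. The `type:diff`/`type:commit` check reflects the diff-and-commit limitation described earlier; the `repo:` requirement and all names (`TRIGGER_CRITERIA`, `checkTriggerQuery`, `isValidTriggerQuery`) are hypothetical, and simple regular expressions stand in for a real query parser.

```typescript
// One independent constraint on a trigger query, shown as a checklist item.
interface Criterion {
  label: string;
  test: (query: string) => boolean;
}

interface CriterionResult {
  label: string;
  met: boolean;
}

// Hypothetical criteria; regexes are a simplification of real query parsing.
const TRIGGER_CRITERIA: Criterion[] = [
  { label: "Is not empty", test: (q) => q.trim().length > 0 },
  // Reflects the limitation that notifications only work for diffs and commits.
  {
    label: "Is type:diff or type:commit",
    test: (q) => /(^|\s)type:(diff|commit)(\s|$)/i.test(q),
  },
  // Assumed extra constraint, for illustration only.
  { label: "Includes a repo: filter", test: (q) => /(^|\s)repo:\S+/i.test(q) },
];

// Evaluate every criterion independently, like a password-strength checklist.
function checkTriggerQuery(query: string): CriterionResult[] {
  return TRIGGER_CRITERIA.map((c) => ({ label: c.label, met: c.test(query) }));
}

// The query is accepted only when all criteria are met.
function isValidTriggerQuery(query: string): boolean {
  return checkTriggerQuery(query).every((r) => r.met);
}
```

Because each criterion reports its own status, the UI can render a row per constraint with a check or cross, which is what makes the pattern familiar from password forms.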

Results

As a first iteration, code monitoring was quite successful. All of our customers who had been using saved search notifications migrated to code monitoring, and we quickly began to hear feedback about other notification channels and actions that they’d like to use. Through product analytics, we learned that code monitoring is used heavily when adopted by a given user: if they have one thing to monitor, they likely have many.

Our initial design approach formed the basis of a year-long feature roadmap, which built on top of the minimum lovable product to add things like webhooks, Slack notifications, expanded trigger query capabilities, and more, all ultimately unlocking additional value for existing and new customers.

Code monitoring as a product feature also became a key part of early sales conversations, particularly those oriented around security or code quality use cases, and is frequently cited as a factor in landing new customers.